Wireless Virtual Reality Helmets: Yesterday, Today and Tomorrow

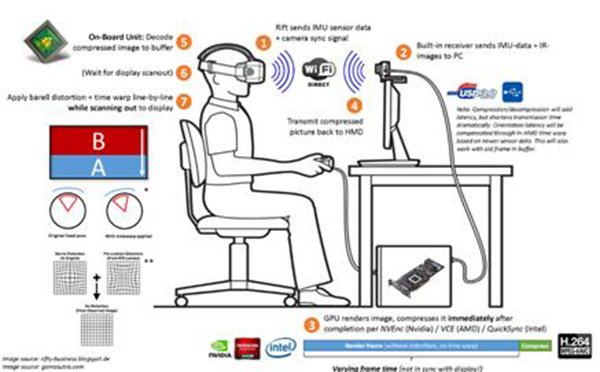

[Baidu VR original article; please credit the source when reposting] On November 11, 2016, China's Singles' Day, what excited VR enthusiasts most was not a dinner invitation from their crush but HTC's wireless virtual reality helmet. Yes, this day deserves to be remembered, as if the telephone had turned into a mobile phone overnight. Since the Oculus DK1 set off the current virtual reality revolution, the space people demand for VR experiences has kept growing: seated three degrees of freedom (3DoF), seated six degrees of freedom (6DoF), standing interaction, room-scale interaction and warehouse-scale multiplayer interaction. Each step brings more gameplay and better immersion. As space requirements keep growing, VR input devices change by the day, but VR headsets have been slow to develop. Palmer Luckey once said at CES that the biggest obstacle to standing and larger-scale experiences is the cable. Is it really that hard to cut the cable on a VR helmet? Yes, because VR helmets make extreme technical demands. The first is latency: a good VR experience requires a motion-to-photon latency within 20 ms, and anything longer easily causes dizziness. The second is resolution: the current mainstream VR headset resolution is 2.5K (2560×1440), and at the required field of view anything lower produces a noticeable screen-door effect that weakens immersion. The third is rendering power: stereo rendering for two eyes consumes about 70% more GPU than rendering a single view, so nearly twice the rendering capability is needed, or picture quality must be reduced. Although there are many obstacles to wireless virtual reality helmets, many solutions have already been proposed in the pursuit of wireless, and more will follow. In this article I trace them from concept to technology to outline the yesterday, today and tomorrow of the wireless virtual reality helmet.
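
The latency and rendering constraints above boil down to simple arithmetic. The following is a minimal sketch, not any vendor's tool: it treats the pipeline as serial stages whose latencies add up, and scales a measured mono render time by the roughly 70% stereo overhead quoted above. The `Stage` class, the function names and every millisecond value in the usage lines are hypothetical.

```python
from dataclasses import dataclass

MTP_LIMIT_MS = 20.0     # motion-to-photon threshold quoted in the text
STEREO_OVERHEAD = 0.70  # "about 70% more GPU" for two eyes (from the text)


@dataclass
class Stage:
    """One serial step between head motion and light leaving the display (hypothetical)."""
    name: str
    ms: float


def stereo_render_ms(mono_ms: float) -> float:
    """Estimate two-eye render time from a single-view measurement."""
    return mono_ms * (1.0 + STEREO_OVERHEAD)


def motion_to_photon_ms(stages: list[Stage]) -> float:
    """Serial stages: the total latency is the sum of the stage latencies."""
    return sum(stage.ms for stage in stages)


def within_budget(stages: list[Stage], limit_ms: float = MTP_LIMIT_MS) -> bool:
    """True if the summed pipeline latency stays inside the budget."""
    return motion_to_photon_ms(stages) <= limit_ms


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    pipeline = [
        Stage("sensor read + fusion", 1.0),
        Stage("stereo render", stereo_render_ms(6.0)),
        Stage("display scan-out", 8.0),
    ]
    total = motion_to_photon_ms(pipeline)
    print(f"{total:.1f} ms, within budget: {within_budget(pipeline)}")
```

Adding an encode, Wi-Fi transmit and decode stage of close to 20 ms to a pipeline like this is enough to break the budget on its own, which is the core problem of the streaming helmets described next.
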

Yesterday

The first of yesterday's wireless virtual reality helmets is the mobile phone helmet. Indeed, the phone helmet was our earliest contact with wireless VR. Phone helmets started with Cardboard, moved on to GearVR, which offers the best experience, and then to all-in-one headsets. Because they run on mobile phone chips, phone helmets were wireless from birth. Their technology needs little description: the advantage is low cost, and the disadvantages are the lack of native positional tracking and a GPU too weak for complex scenes and high-quality rendering. Constrained by current mobile GPU performance, the phone helmet's best use case is panoramic video, and it can hardly deliver a high-quality immersive interactive experience at standing scale or above. The earliest phone helmet made in China was Storm Mirror (Baofeng Mojing). In an era when VR was in very short supply, it was China's first introduction to the wireless virtual reality helmet.

The second of yesterday's wireless helmets is the streaming phone helmet. It captures the video output of a VR application running on a PC frame by frame, encodes and compresses the captured frames, and sends them over Wi-Fi to a phone helmet or an all-in-one, which decodes them and outputs them to the screen. The advantage is that it can use the PC's powerful graphics resources to render complex scenes at high quality. The disadvantages are that video encoding and decoding take time, and together with Wi-Fi transmission they produce a long motion-to-photon latency that causes severe dizziness; moreover, high-quality rendered images lose noticeable quality after video compression.
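
Why can't a streaming helmet simply skip compression? A rough bitrate check makes it clear. This sketch assumes uncompressed pixels at 24 bits (8-bit RGB or YUV444) and ignores blanking intervals and protocol overhead; the link figures are the peak rates quoted in this article, and real throughput is lower. The function names are illustrative.

```python
WIFI_80211AC_GBPS = 1.3  # fastest 802.11ac rate quoted in this article
WIGIG_60GHZ_GBPS = 7.0   # peak WiGig 60 GHz rate quoted in this article


def raw_bitrate_gbps(width: int, height: int, fps: float,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in Gbit/s (active pixels only)."""
    return width * height * fps * bits_per_pixel / 1e9


def needs_compression(bitrate_gbps: float, link_gbps: float) -> bool:
    """True if the raw stream does not fit into the link's capacity."""
    return bitrate_gbps > link_gbps


if __name__ == "__main__":
    full_hd = raw_bitrate_gbps(1920, 1080, 60)
    print(f"1080p60 raw: {full_hd:.2f} Gbit/s")
    print("over 802.11ac needs compression:",
          needs_compression(full_hd, WIFI_80211AC_GBPS))
    print("over WiGig needs compression:",
          needs_compression(full_hd, WIGIG_60GHZ_GBPS))
```

1920×1080 at 60 fps works out to about 2.99 Gbit/s, more than twice the 802.11ac figure, hence the encoder, the decoder and their latency. Passing `bits_per_pixel=12` models 4:2:0 chroma subsampling: a 2160×1200 stream at 90 fps then needs about 2.80 Gbit/s, slightly less than 1080p60 at 24 bits per pixel.
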

The earliest streaming helmet product was Trinus VR. Trinus VR uses the CPU for video capture and compression, with a transmission latency of up to 100 ms, but it opened up a truly wireless era. Thanks to Nvidia's NVIDIA Video Codec SDK, the application's video output can be captured and hardware-encoded directly by the GPU's NVENC encoder. Combined with hardware decoding on a phone or the Tegra platform, encoding and decoding time can be reduced to under 20 ms. Nvidia first applied this technology to game streaming on its Shield handheld, and it was later used in streaming phone helmets, reducing their motion-to-photon latency to under 40 ms; one example is VisusVR. Even at 40 ms, however, the latency still causes a strong sense of dizziness.

The third of yesterday's wireless helmets is the backpack + wired helmet. Although research on wireless virtual reality helmets lagged behind, people's eagerness for large-scale interaction gave birth to a compromise: a backpack PC plus a wired helmet. The first company to commercialize a complete backpack + wired helmet solution was Australia's Zero Latency. Its goal is a warehouse-scale multiplayer VR solution that lets several players enter the same game scene at once. This requires clearing several hurdles. First, wireless: the venue is 400 square meters, so players must move untethered, because a cable can hardly support walking over such a large area. Second, latency: players play for relatively long sessions, so they must not feel noticeable dizziness, and latency must match mainstream headsets, that is, stay within 20 ms. Third, image quality: to create a good game atmosphere, rendering must be done on a host GPU. Fourth, pose computation.

Because players walk around untethered, six degrees of freedom are required. Position cannot be computed from the inertial sensors alone, and with an inexpensive optical system (60 fps refresh rate) the position computation has a latency of at least 16.6 ms; adding wireless transmission pushes it above 18 ms. Orientation, which has the greater effect on dizziness, must therefore be computed locally from the IMU at a high sampling rate (1000 Hz). Zero Latency's backpack system is built from an Alienware Alpha host and a mobile power supply. The backpack exchanges data with the server over Wi-Fi, its graphics are at the 970M level, and a DK2 serves as the head-mounted display, cabled to the Alpha. Together these meet all four goals. Although this backpack system cannot strictly be called a wireless helmet, it achieves what a wireless helmet would; its drawbacks are that the backpack is awkward to wear and battery life is short.

Zero Latency's approach had a profound influence on later virtual reality theme parks, the most famous being The Void in Salt Lake City. The Void gave the backpack system and the wired helmet a more sci-fi look, added force feedback, and combined OptiTrack's optical motion capture system for more accurate positioning, richer interaction with virtual objects and a multi-user interaction system, making the experience more interesting. So far, the so-called "wireless virtual reality" solution has settled on backpack + wired helmet + Wi-Fi, which has become the preferred solution for warehouse-scale virtual reality interaction and VR theme parks. Many vendors in the Chinese market have also launched backpack systems, including MSI, Tongfang and Zotac.

Today

2016 is not just another year of virtual reality: it is the first year of the wireless virtual reality helmet. There are two ways to achieve an ideal wireless virtual reality helmet.
One is to transmit the rendered video signal wirelessly; the other is to perform high-performance rendering inside the helmet.

The key to today: 60 GHz communication

Wirelessly transmitting the rendered video signal is the most direct way to build a wireless virtual reality helmet. Back in the streaming phone helmet era, people already rendered on a remote PC and sent the video to the helmet over Wi-Fi. The biggest drawback of this method is that the video must be compressed: uncompressed video at even 1920×1080@60fps needs about 3 Gbps, while the fastest 802.11ac link offers 1.3 Gbps, so the video cannot be sent over Wi-Fi without compression. As mentioned earlier, even with NVENC hardware encoding and hardware decoding the added latency approaches 20 ms, which is a disaster for the VR experience. Fortunately, 60 GHz millimeter-wave communication made great progress in the second half of 2015. Current WiGig 60 GHz links support up to 7 Gbps, which makes it possible to transmit PC-rendered video as raw, uncompressed data. Lattice is the world's largest supplier of 60 GHz modules; its modules can wirelessly transmit 1920×1080@60fps data over short distances (about 20 m).

The first company to use 60 GHz in a wireless virtual reality helmet design was Serious Simulations, whose wireless headset is mainly used for military training. It uses two 1920×1080 displays to achieve a wider field of view, but because it is limited to a single module, the left and right eyes show the same image. Since Lattice already makes 60 GHz modules, why have so few teams been able to design wireless virtual reality helmets? There are several reasons. The first is the screen.

Lattice's module takes 1920×1080@60fps input and produces 1920×1080@60fps output, so the video must be fed to the module as 1920×1080, sent over the 60 GHz link and then output to the screen as 1920×1080. This means the screen must accept landscape-mode input at 1920×1080 or lower. A search on the Panelook website quickly shows that the smallest screens supporting landscape mode are 7 inches and do not reach 1080p, and a 7-inch screen is clearly too large for a headset. The ideal headset screen is 5.5 inches with a resolution of at least 1080p. Such screens are mostly used in mobile phones and scan in portrait mode, so the data must be converted from landscape to portrait, or adapted to a dual-screen layout.

The second is line-rate conversion. As noted above, converting for the screen without adding latency requires processing the output video data at line rate, which demands excellent high-speed video signal processing skills from the helmet design team.

The third is, once again, latency. Take landscape-to-portrait conversion as an example. When the video is rotated by 90 degrees, the last pixel of the first row in landscape mode becomes exactly the last pixel of the last row in portrait mode, so the conversion can only be completed after buffering a whole frame, which adds nearly one frame of latency. A better algorithm is needed to complete the conversion without the latency of a frame buffer.

The fourth is the handling of input data such as inertial sensor readings. Current wired virtual reality helmets send the helmet's inertial sensor data to the PC over USB for sensor fusion, which obtains the helmet's orientation with the shortest possible latency; the PC then computes the camera pose and renders the image.
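
The fusion step can be illustrated with the simplest textbook technique, a one-axis complementary filter. This is a generic sketch, not the fusion algorithm of the VIVE or any other headset; it assumes a 1000 Hz gyro, an accelerometer used as a gravity reference, and an axis convention (x forward, z up, pitch positive nose-up) that real IMUs may not share. The function names are hypothetical.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the gravity vector from an accelerometer at rest.

    Assumed convention: x forward, z up, pitch positive when the nose tilts up.
    """
    return math.degrees(math.atan2(ax, math.hypot(ay, az)))


def complementary_step(pitch_deg: float, gyro_rate_dps: float,
                       accel_deg: float, dt_s: float,
                       alpha: float = 0.98) -> float:
    """One complementary-filter update for a single axis.

    Integrating the gyro reacts instantly but drifts; the accelerometer angle
    does not drift but is noisy. Weighting them alpha : (1 - alpha) keeps the
    fast gyro response while slowly pulling the estimate toward gravity.
    """
    gyro_estimate = pitch_deg + gyro_rate_dps * dt_s
    return alpha * gyro_estimate + (1.0 - alpha) * accel_deg


if __name__ == "__main__":
    pitch = 0.0
    for _ in range(1000):  # one second of samples at 1000 Hz
        # Level head, but the gyro has a constant 1 deg/s bias.
        pitch = complementary_step(pitch, 1.0, accel_pitch_deg(0.0, 0.0, 1.0), 0.001)
    print(f"filtered pitch after 1 s: {pitch:.3f} deg")
```

With a 1000 Hz IMU (`dt_s = 0.001`) and a constant gyro bias of 1 °/s, pure integration drifts by 60° per minute, whereas the filtered pitch settles at about 0.049°, because the accelerometer term keeps pulling it back.
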

When the helmet's video output becomes wireless, the inertial sensor input must become wireless too, which to some extent requires the design team to have ultra-low-latency wireless communication skills as well as solid sensor fusion skills.

The earliest Chinese company to design a wireless virtual reality helmet was ZVR, which launched its self-developed wireless helmet in early 2016. ZVR's helmet has a 1920×1080 OLED screen and integrates the battery in the back of the helmet. Because the helmet contains a 60 GHz module and performs only image conversion and output, its power consumption is constant, and the official figure for battery life is 3.5 hours.

In contrast to ZVR's from-scratch design, TPCast partnered with HTC VIVE and solved the wireless problem of an existing wired helmet with a plug-in module, giving it an easy-to-remember name: the Scissors Plan. Because VIVE transfers USB data in its own packet format, TPCast's first step was to work out the USB data structure and, on the transmitter connected to the PC, present a VendorId that identifies it as a VIVE, so that DirectMode works and Steam applications load. In addition, VIVE's resolution is 2160×1200@90fps, which exceeds the 1920×1080@60fps data the module carries, so it can be inferred that TPCast may convert YUV444 to YUV420 to support the higher resolution and reduce the actual refresh rate to 60 fps. That would be inconsistent with Wang's statement at the press conference that image quality is not reduced. Of course, this is only an inference that must wait for verification after the product ships, but TPCast is definitely a solid step forward for wireless virtual reality helmets.

High-performance embedded computing: more than just all-in-ones

Even before HTC released its wireless virtual reality helmet, Oculus revealed a wireless virtual reality helmet at its conference.
According to the limited information available, Oculus's wireless virtual reality helmet does not use a high-performance PC: computation happens directly in the helmet, which has a built-in inside-out positioning system. It looks more like a combination of a wired helmet and an all-in-one. Of course, the specific implementation and how well it works will need more information to judge. Whether it is Oculus's all-in-one approach, TPCast's plug-in approach or ZVR's from-scratch approach, the curtain has risen on wireless virtual reality helmets. Go Oculus, go TPCast, go ZVR!

Tomorrow

Is there an ultimate solution for wireless virtual reality helmets? Of course: once embedded GPUs are powerful enough and power consumption is low enough, a mini console + helmet or an all-in-one will be the ultimate solution, but that day may be a long way off. So what is the solution for tomorrow? How can we use the PC's powerful rendering, add almost zero extra latency (at least perceptibly), and break through the resolution and refresh-rate ceiling of 60 GHz links? It may look like this. Graphics are still rendered on the PC's GPU, but the output is an image with a wider field of view than a normal frame, similar to a panoramic video. The image is compressed with a method similar to Facebook's panoramic video compression and encoded by the GPU. The compressed stream is sent point-to-point over Wi-Fi to a dedicated all-in-one with hardware video decoding. After decoding, the all-in-one treats the result as a quasi-panoramic video: the helmet calculates the current view direction from its inertial sensors and outputs the part of the panorama for that view. Combined with ATW (asynchronous timewarp), the user sees a stable, low-latency viewport image, as if it were generated locally in the helmet.
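
The helmet-side step of picking the current view out of the quasi-panorama can be sketched as below. This is a deliberately simplified illustration under stated assumptions: an equirectangular panorama, yaw-only head rotation and a plain column crop. A real implementation, and ATW in particular, reprojects every pixel using the full 3D orientation; the function names are hypothetical.

```python
def yaw_to_column(yaw_deg: float, pano_width: int) -> int:
    """Map a yaw angle in degrees to a column of an equirectangular panorama.

    Assumed convention: yaw -180 deg is column 0, yaw grows to the right,
    and the panorama wraps around at +/-180 deg.
    """
    return int(((yaw_deg + 180.0) % 360.0) / 360.0 * pano_width) % pano_width


def viewport_columns(yaw_deg: float, hfov_deg: float, pano_width: int) -> list[int]:
    """Panorama columns visible for a given head yaw and horizontal FOV."""
    left = yaw_to_column(yaw_deg - hfov_deg / 2.0, pano_width)
    span = round(hfov_deg / 360.0 * pano_width)
    # Wrap across the panorama seam so a view facing backwards still works.
    return [(left + i) % pano_width for i in range(span)]


def crop_viewport(pano: list[list[int]], yaw_deg: float,
                  hfov_deg: float) -> list[list[int]]:
    """Cut the visible columns out of every row of the panorama."""
    cols = viewport_columns(yaw_deg, hfov_deg, len(pano[0]))
    return [[row[c] for c in cols] for row in pano]


if __name__ == "__main__":
    tiny_pano = [[0, 1, 2, 3], [10, 11, 12, 13]]  # 4-column toy panorama
    print(crop_viewport(tiny_pano, yaw_deg=0.0, hfov_deg=180.0))
```

Because this selection runs locally at the IMU rate, turning the head changes the view immediately, even though the panorama itself arrives over Wi-Fi with more delay.
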

Meanwhile, the encoded video stream keeps flowing into the helmet in the background over Wi-Fi with a latency under 20 ms, which takes care of position updates, where relatively high latency is tolerable. I infer that QuarkVR, which previously worked with VIVE, is likely to use this approach. Let us wait and see.

As someone once said, tracking in virtual reality is not achieved by a single sensor but by combining different sensors so that it works effectively in different environments. The same is true of wireless virtual reality helmets: they need to combine different technologies to meet the requirements of high performance, high resolution, low latency, wide range and multiple users.