Cloud computing, big data, and artificial intelligence: understanding how the three relate

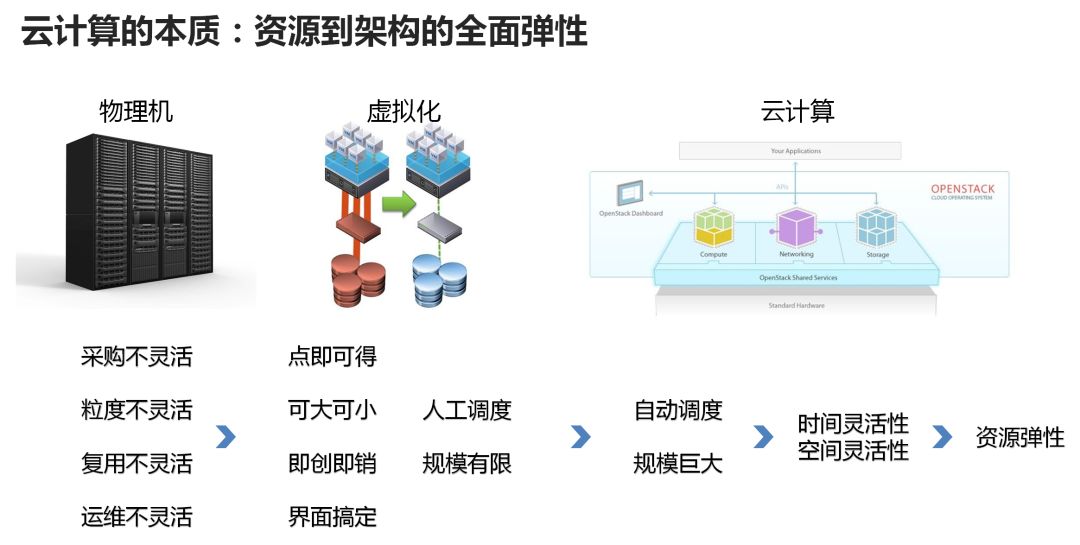

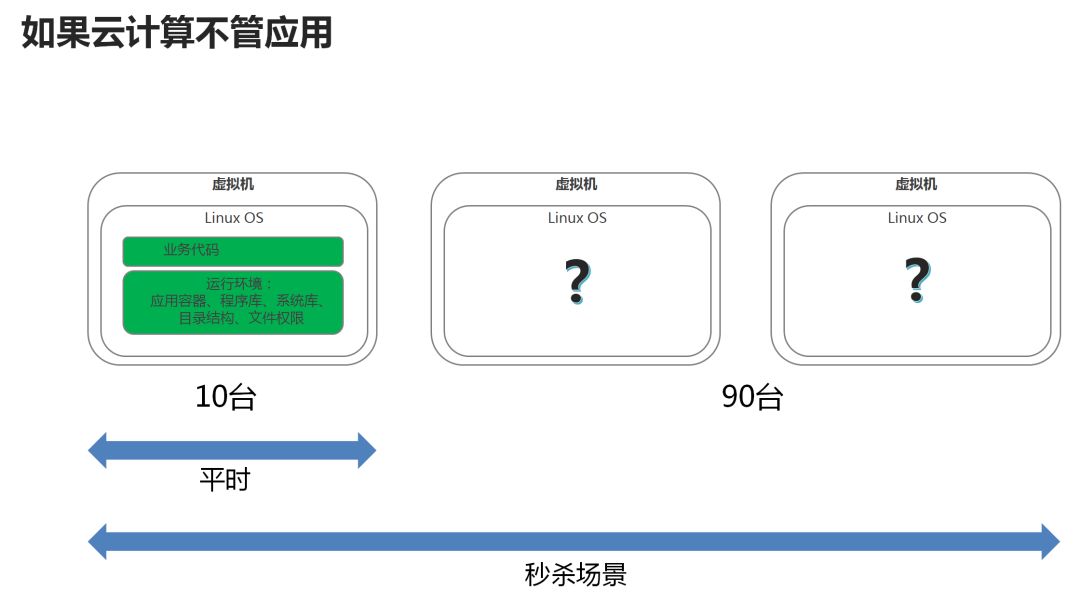

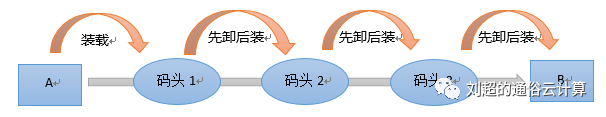

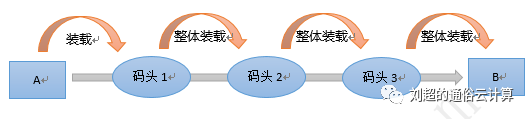

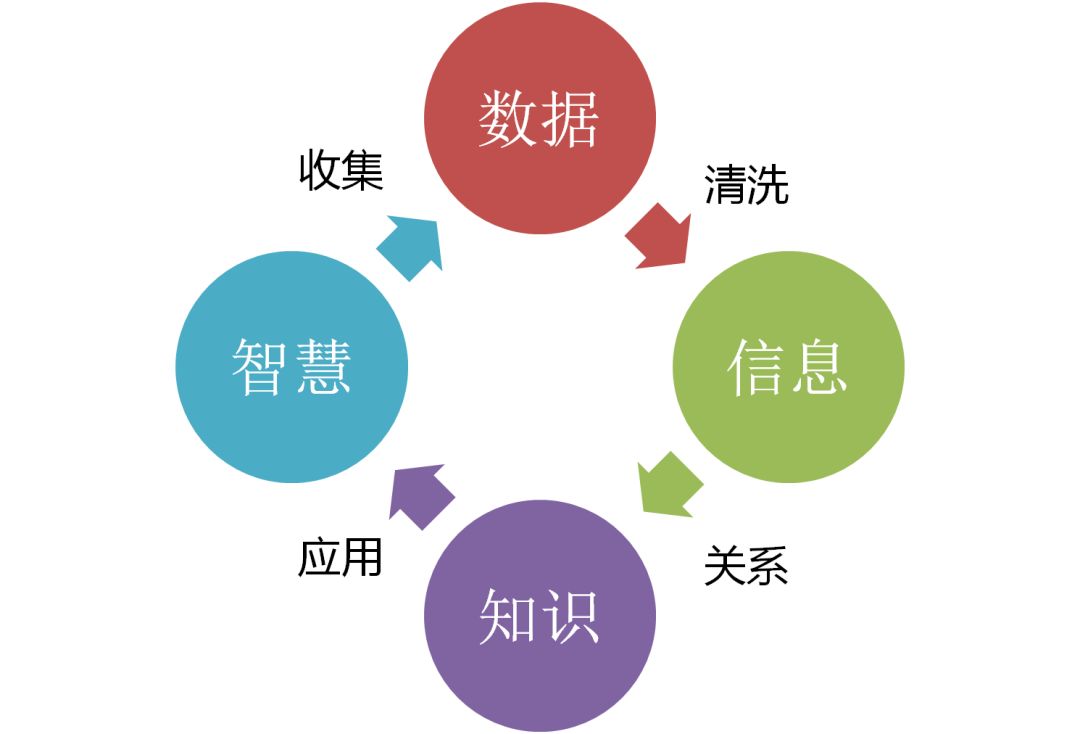

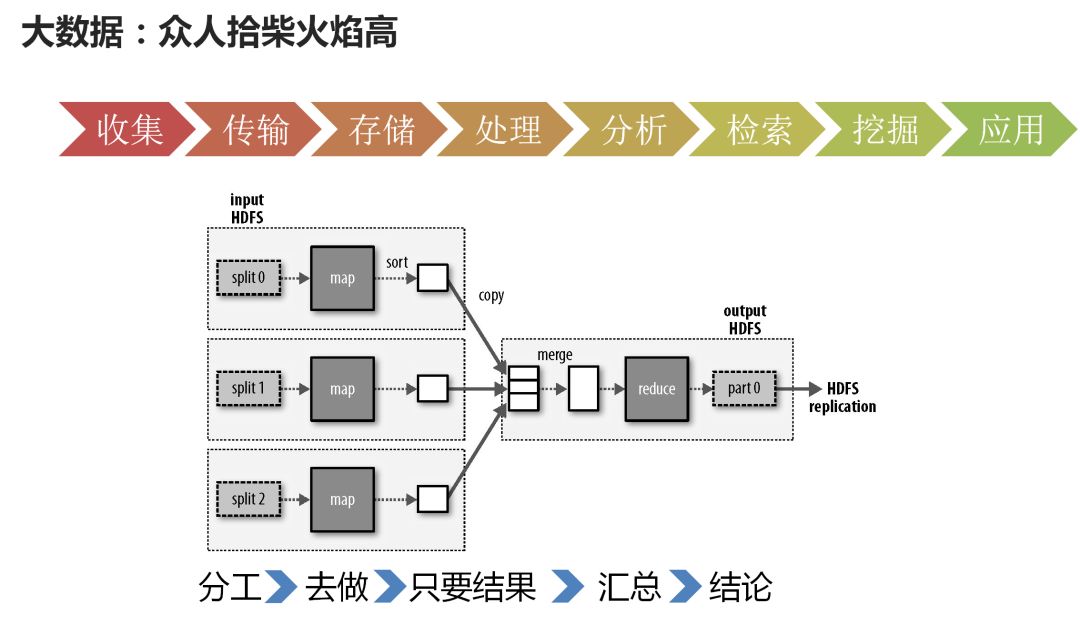

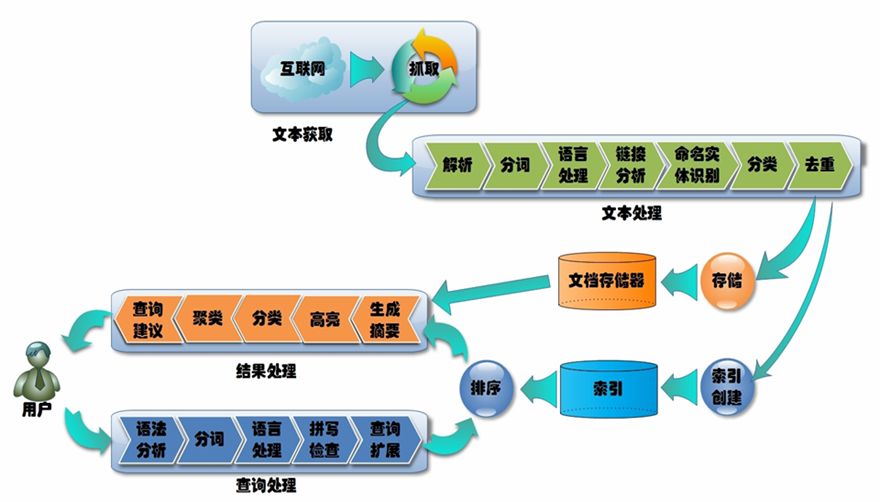

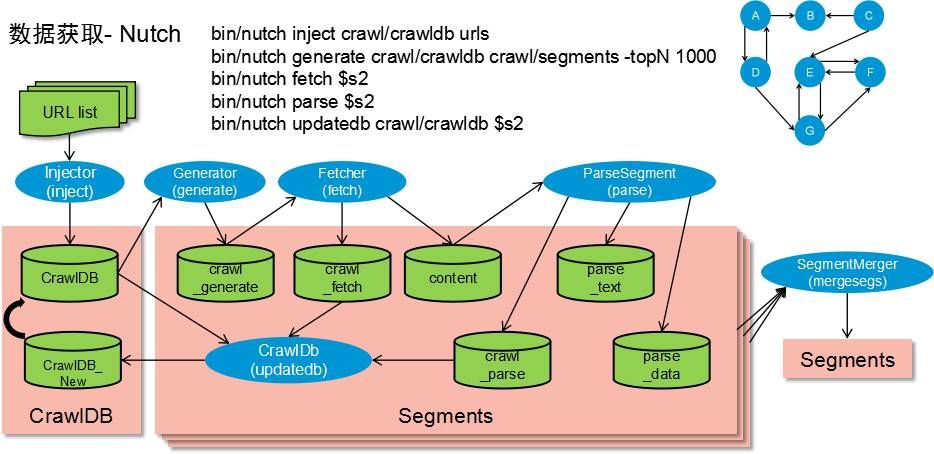

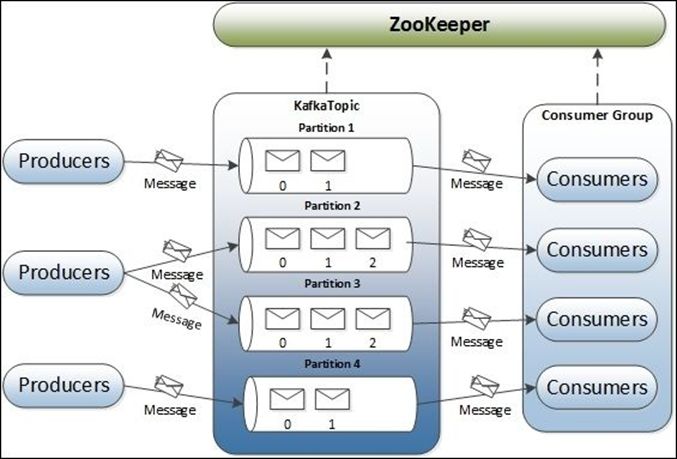

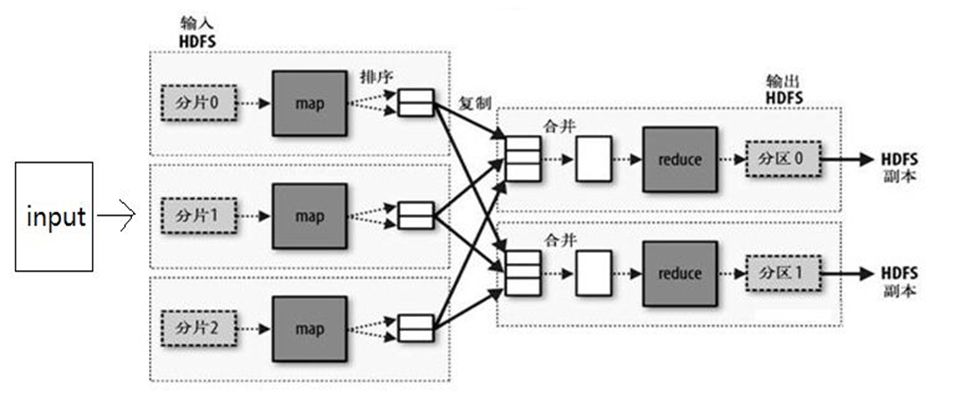

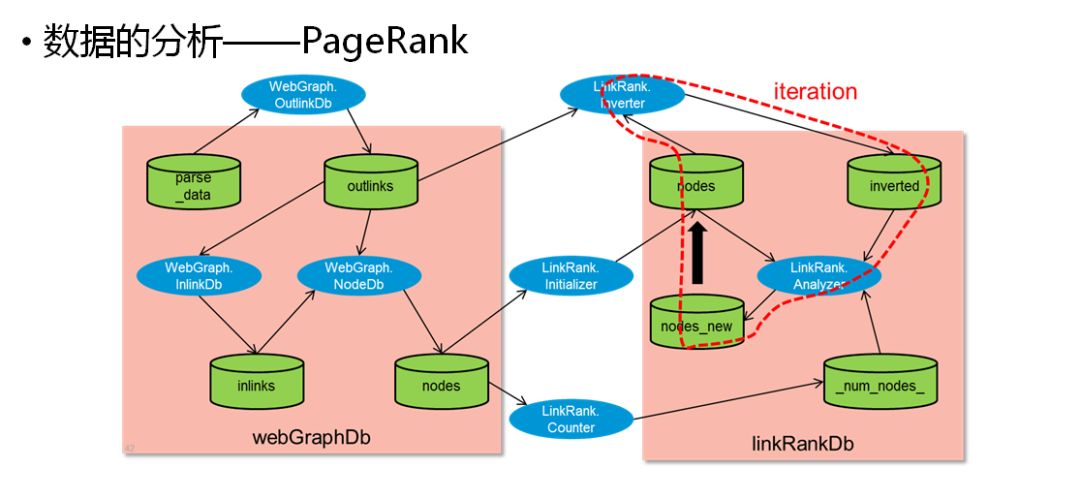

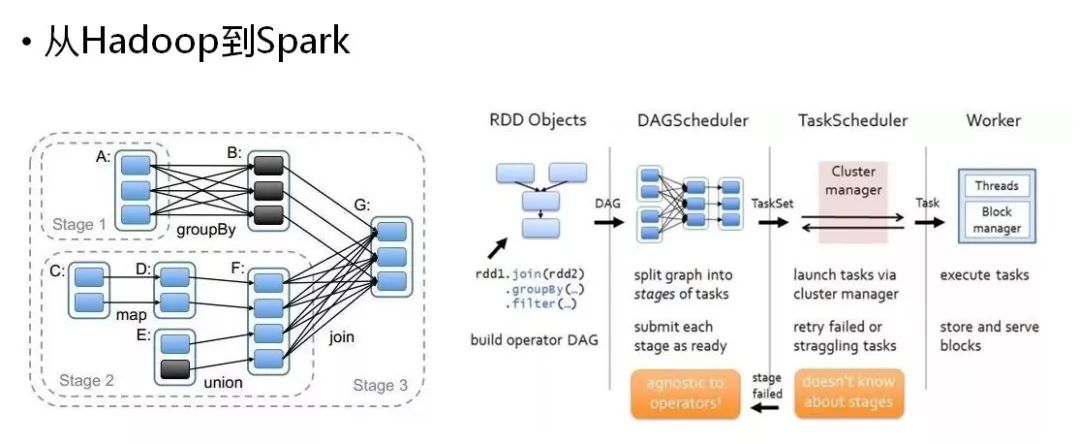

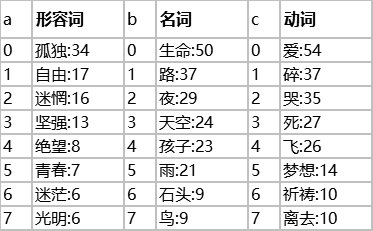

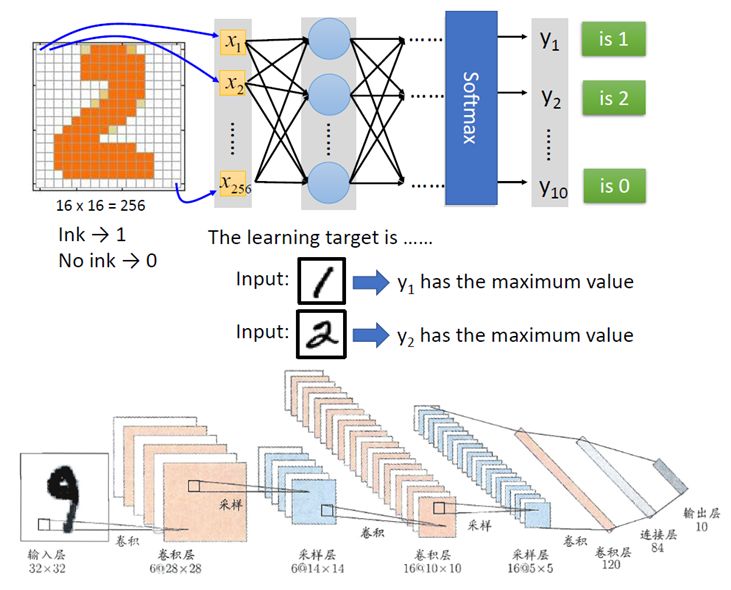

Today I want to talk about cloud computing, big data, and artificial intelligence. Why these three? Because all three are very hot right now, and they seem to be connected: talk about cloud computing and big data comes up; talk about big data and artificial intelligence comes up; talk about artificial intelligence and cloud computing comes up. The three seem to complement each other and to be inseparable. For non-technical readers, though, the relationship between them can be hard to grasp, so it is worth explaining.

I. The original goal of cloud computing

Let's start with cloud computing. Its original goal was to manage resources, chiefly three kinds: computing resources, network resources, and storage resources.

1 Managing a data center is like configuring a computer

What are computing, network, and storage resources? Take buying a laptop as an example. Do you care what CPU it has and how much memory? Those two are the computing resources.

To get online, the computer needs either a network port for a cable or a wireless card that can connect to your router. Your home also needs a subscription with a carrier such as China Unicom, China Mobile, or China Telecom, for example 100 Mbps of bandwidth. A technician then runs a cable to your home and may help you configure the router to connect to the carrier's network. After that, all your computers, phones, and tablets can go online through the router. This is the network resource.

You may also ask how big the hard disk is. Disks used to be tiny, around 10 GB; later, 500 GB, 1 TB, and 2 TB disks stopped being anything special (1 TB is 1000 GB). This is the storage resource.

What holds for one computer holds for a data center too.
Imagine a very, very large machine room filled with servers. They too have CPUs, memory, and hard disks, and they too reach the Internet through router-like devices. The question then is: how do the people who run the data center manage all this equipment in a unified way?

2 Flexibility means whenever you want it, as much as you want

The goal of management is flexibility in two respects. An example makes them concrete. Suppose someone needs a very small computer: one CPU, 1 GB of memory, a 10 GB disk, and 1 Mbps of bandwidth. Can you give it to them? A machine that small is weaker than any laptop today, and home broadband already runs at 100 Mbps. Yet on a cloud computing platform, anyone who wants that resource gets it on request.

That takes flexibility in two respects.

Time flexibility: you get it when you want it, the moment you need it.

Space flexibility: you get as much as you want. A very small computer can be provided; so can a very large space such as a cloud disk, where each user is allocated so much space that there is always room to upload and it never seems to run out.

Space flexibility and time flexibility together are what we usually call the flexibility, or elasticity, of cloud computing. Solving this flexibility problem took a long period of development.

3 Physical equipment is not flexible

The first stage was the era of physical equipment. When a customer needed a computer, we bought one and put it in the data center.

Physical equipment has of course grown ever more powerful: servers easily have a hundred GB of memory; a single port on a network device can carry tens or even hundreds of Gbps; storage in a data center starts at the PB level (1 PB is 1000 TB, and 1 TB is 1000 GB).

But physical equipment cannot be very flexible. First, it lacks time flexibility.
You cannot have a machine the moment you want it. Buying a server or a computer takes procurement time. If a user suddenly tells a cloud vendor that they want a computer and it has to be a physical server, it cannot be bought on the spot. With a good supplier relationship it may take a week; with an ordinary one, a month. The user waits a long time for the machine to arrive, then logs in and slowly starts deploying the application. Time flexibility is very poor.

Second, space flexibility is poor too. The user above needs a very small computer, but where do you find such a small model today? You cannot go out and buy a tiny machine with 1 GB of memory and an 80 GB disk just to satisfy that user. If you buy a big one, you have to charge the user more because the machine is big, yet the user only needs a little, so paying more feels unfair.

4 Virtualization is much more flexible

Someone found a way. The first approach is virtualization. The user only needs a small computer? The physical equipment in the data center is very powerful, so I can virtualize a small slice of the physical CPU, memory, and disk for this customer and other small slices for other customers. Each customer sees only their own small slice, while in fact each is using a small part of one large machine.

Virtualization makes different customers' computers appear isolated. That is, this disk looks like mine to me and that disk looks like yours to you, while in reality my 10 GB and your 10 GB may sit on the same very large storage device. And if the physical equipment is ready in advance, virtualization software can create a virtual computer very quickly, basically within a few minutes. That is why a computer created on any cloud comes up in a few minutes.
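
The slicing idea described above can be pictured with a toy model. This is only a sketch under simplifying assumptions: `PhysicalHost`, `create_vm`, `view`, and `free` are hypothetical names invented for the illustration, and real virtualization software does far more than bookkeeping. It shows just two points from the text: each customer sees only their own slice, and creating a slice on hardware that is already in place is a quick operation.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalHost:
    """A hypothetical physical server whose resources are carved into virtual machines."""
    cpus: int
    memory_gb: int
    disk_gb: int
    # customer name -> (cpus, memory_gb, disk_gb) handed to that customer
    vms: dict = field(default_factory=dict)

    def free(self):
        """Return the (cpus, memory_gb, disk_gb) not yet handed out."""
        used = [sum(vm[i] for vm in self.vms.values()) for i in range(3)]
        return (self.cpus - used[0], self.memory_gb - used[1], self.disk_gb - used[2])

    def create_vm(self, customer, cpus, memory_gb, disk_gb):
        """Carve out a slice for one customer; refuse if the host is too full."""
        free_cpus, free_mem, free_disk = self.free()
        if cpus > free_cpus or memory_gb > free_mem or disk_gb > free_disk:
            return False
        self.vms[customer] = (cpus, memory_gb, disk_gb)
        return True

    def view(self, customer):
        """Each customer sees only their own slice, never the whole machine."""
        return self.vms.get(customer)


host = PhysicalHost(cpus=32, memory_gb=128, disk_gb=2000)
host.create_vm("alice", cpus=1, memory_gb=1, disk_gb=10)
host.create_vm("bob", cpus=1, memory_gb=1, disk_gb=10)
print(host.view("alice"))  # (1, 1, 10): alice's slice only
print(host.free())         # (30, 126, 1980): what is left on the shared host
```

Both small machines live on the same large host, yet neither customer can see the other's slice, which is the "looks isolated" property the text describes.
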
With virtualization, space flexibility and time flexibility were basically solved.

5 Making money and having ideals in the world of virtualization

In the virtualization stage, the most impressive company was VMware. It implemented virtualization technology early and could virtualize computing, networking, and storage. The company did very well: its performance was strong, its virtualization software sold well, it made a lot of money, and it was later acquired by EMC (a Global 500 company and the leading brand among storage vendors).

But the world still has plenty of idealists, especially among programmers. What do idealists like to do? Open source.

Much of the world's software is either closed source or open source, where "source" means source code. Say a piece of software is well made and everyone loves using it, but I keep its code closed: only my company knows it, and anyone who wants to use the software has to pay me. That is closed source.

Yet there are always some gurus who cannot stand watching one company take all the money. They think: if you know this technology, so do I; if you can build it, so can I. What I build, I will not charge for. I will publish the code and share it, so that anyone in the world can use it and everyone benefits. That is open source.

Tim Berners-Lee, who was in the news recently, is very much an idealist. In 2017 he received the 2016 Turing Award "for inventing the World Wide Web, the first web browser, and the fundamental protocols and algorithms allowing the Web to scale." The Turing Award is the Nobel Prize of the computing world. What is most admirable, though, is that he gave the World Wide Web, the WWW technology we all use, to the world free of charge. Everything we do online today owes him thanks. Had he charged for the technology, he would probably be about as rich as Bill Gates.
There are many examples of open source versus closed source.

In the closed-source world there is Windows, and everyone who uses Windows pays Microsoft; in the open-source world, Linux appeared. Bill Gates made a fortune from closed-source software such as Windows and Office and became the richest man in the world, so another guru developed a different operating system, Linux. Many people may never have heard of Linux, but a great many back-end server programs run on it. When everyone enjoys Double Eleven (the November 11 shopping festival), the systems that support the flash sales, whether at Taobao, JD.com, or Kaola, all run on Linux.

Where there is Apple, there is Android. Apple's market value is very high, but we cannot see the code of Apple's system. So some gurus wrote the Android mobile operating system. That is why almost every other phone maker ships Android: Apple's system is not open source, while Android is available to everyone.

Virtualization software is the same. There is VMware, which is very expensive, so gurus wrote two open-source virtualization packages, one called Xen and the other KVM. If you are not in technology you can ignore these two names, but they will come up again later.

6 Semi-automatic virtualization and fully automatic cloud computing

To say that virtualization software solves the flexibility problem is not entirely accurate. When virtualization software creates a virtual computer, someone generally has to specify by hand which physical machine it goes on, and the process may need fairly complex manual configuration as well. That is why using VMware's virtualization software calls for passing a demanding certification, and why people who hold the certificate command high salaries: it shows how complex the work is.
Therefore, the clusters of physical machines that virtualization software alone can manage are not especially large, generally a dozen, a few dozen, or at most a few hundred machines.

This hurts time flexibility: creating one virtual computer is quick, but as the cluster grows, the manual configuration becomes more and more complex and time-consuming. It hurts space flexibility as well: when there are many users, a cluster of this size is far from "as much as you want." The resources are likely to run out quickly, and more hardware has to be purchased.

So clusters keep growing: they start at a thousand machines and easily reach tens of thousands, or even hundreds of thousands to millions. Look up the BAT companies (Baidu, Alibaba, Tencent), or NetEase, Google, and Amazon, and the server counts are frightening. With that many machines, relying on people to pick a spot for each virtual computer and configure it is almost impossible. Machines have to do it.

People invented all sorts of algorithms for this job; the component that runs them is called a scheduler. Put simply, there is a dispatch center and a pool of thousands of machines. Whatever CPU, memory, and disk a user's virtual computer needs, the dispatch center automatically finds a place in the pool that can satisfy it, starts the virtual computer, and configures it, so the user can use it right away. We call this stage pooling, or cloudification. Only at this stage can it be called cloud computing; before it, it is only virtualization.

7 Private and public cloud computing

Cloud computing comes in roughly two kinds, private cloud and public cloud; some people also connect a private cloud to a public cloud to form a hybrid cloud.

Private cloud: the virtualization and cloud software is deployed in someone else's data center, that is, the customer's own.
Private cloud users tend to be wealthy: they buy land and build their own machine room, buy their own servers, and then have a cloud vendor deploy the software on site. Besides virtualization, VMware later launched cloud computing products and made a great deal of money in the private cloud market.

Public cloud: the virtualization and cloud software is deployed in the cloud vendor's own data center. Users need no large investment; they register an account and can create a virtual computer with a click on a web page. Examples are AWS, Amazon's public cloud, and in China Alibaba Cloud, Tencent Cloud, and NetEase Cloud.

Why did Amazon build a public cloud? Amazon started out as a large e-commerce company outside China, and any e-commerce business runs into scenes like Double Eleven: at a certain moment, everyone rushes to buy. That is exactly when the cloud's time flexibility and space flexibility are needed. The company cannot keep all the resources ready all the time, which would be too wasteful; but it cannot prepare nothing either and just watch all those shoppers who want to buy go unserved. So when the rush comes, it creates a large number of virtual computers to support the e-commerce application, and when the rush is over it releases those resources to do other work. Amazon therefore needed a cloud platform.

Commercial virtualization software was too expensive, however, and Amazon could not hand everything it earned from e-commerce to a virtualization vendor. So Amazon built its own cloud software on top of open-source virtualization technology, the Xen or KVM mentioned above. Unexpectedly, Amazon's e-commerce business went from strength to strength, and so did its cloud platform.
Because its cloud platform had to support its own e-commerce applications, whereas traditional cloud vendors mostly grew out of IT vendors and had almost no applications of their own, Amazon's cloud platform was friendlier to applications. It quickly became the number one brand in cloud computing and made a lot of money.

Before Amazon disclosed the financials of its cloud platform, people wondered: Amazon's e-commerce makes money, but does the cloud? When the report came out, the cloud turned out to be extraordinarily profitable. Last year alone, Amazon AWS had revenue of $12.2 billion and operating profit of $3.1 billion.

8 Making money and having ideals in cloud computing

Amazon, number one in public cloud, was doing splendidly; Rackspace, number two, was doing just so-so. There is no help for it: the Internet industry is cruel and mostly winner-take-all. Many people outside the cloud computing industry may never have heard of the runner-up.

So the runner-up thought: what do I do if I cannot beat the leader? Open source. As noted above, although Amazon uses open-source virtualization technology, its cloud software is closed source. Many companies that wanted to build a cloud platform but could not were left watching Amazon rake in money. If Rackspace opened its source code, the whole industry could work together to make the platform better and better: brothers, let's all join forces and take on the leader.

So Rackspace and NASA together founded the open-source software OpenStack. The figure above shows the OpenStack architecture. Readers outside the cloud computing industry do not need to understand the diagram, but three keywords stand out: Compute, Networking, and Storage. OpenStack, too, is a cloud platform that manages computing, network, and storage resources.

The runner-up's technology was of course very good as well, and once OpenStack existed, things went just as Rackspace had hoped: every big company that wanted to build a cloud went wild.
Think of all the big IT companies: IBM, Hewlett-Packard, Dell, Huawei, Lenovo, and so on all went wild. Every one of them wanted a cloud platform. They had watched Amazon and VMware make so much money and could do nothing about it, because building one from scratch looked very hard. Now, with an open-source cloud platform like OpenStack, all the IT vendors joined the community, contributed to the platform, packaged it into their own products, and sold it together with their own hardware. Some built private clouds, some built public clouds, and OpenStack became the de facto standard for open-source cloud platforms.

9 IaaS: flexibility at the resource level

As OpenStack matured, the scale it could manage grew larger and larger, and multiple OpenStack clusters could be deployed side by side: say one in Beijing, two in Hangzhou, and one in Guangzhou, all under unified management. The overall scale becomes larger still.

At this scale, as far as an ordinary user can perceive, you basically get what you want when you want it, and as much as you want. Take the cloud disk example again: each user is allocated 5 TB or even more. With 100 million users, how much space does that add up to? On paper, 5 TB times 100 million is 500 million TB.

The mechanism behind it is this: of the space allocated to you, you may use only a small part. You are assigned 5 TB, but that large space is only what you see; it is not really handed to you. If you have actually used 50 GB, then 50 GB is what you really get, and as you keep uploading files, more and more space is allocated to you.

When everyone keeps uploading and the cloud platform notices it is getting full (say 70% used), it buys more servers and expands the resources behind the scenes. This is transparent to users; they never see it. As far as perception goes, the flexibility of cloud computing has been achieved. It is a bit like a bank, which gives depositors the feeling that they can withdraw their money whenever they like.
As long as they do not all run on the bank at once, the bank does not collapse.

10 Summary

At this stage, cloud computing has basically achieved time flexibility and space flexibility, that is, flexibility of computing, network, and storage resources. Computing, network, and storage are usually called infrastructure, so the flexibility at this stage is called resource-level flexibility. A cloud platform that manages resources is called infrastructure as a service, the IaaS (Infrastructure as a Service) we hear about so often.

II. Cloud computing manages not only resources but also applications

Once you have IaaS, is flexibility at the resource level enough? Clearly not: there is also flexibility at the application level.

Here is an example. Suppose an e-commerce application normally needs ten machines and needs a hundred on Double Eleven. You may think that is easy: with IaaS, just create 90 more machines. But the 90 new machines are empty. The e-commerce application is not on them, and the company's operations staff have to set them up one by one, which takes a long time.

So although there is flexibility at the resource level, without flexibility at the application level the flexibility is still not enough. Is there a way to solve this?

People added a layer on top of the IaaS platform to manage the flexibility of applications above the resources. This layer is usually called PaaS (Platform as a Service). It is often hard to grasp; it falls roughly into two parts, which I call "automatic installation of your own applications" and "general-purpose applications that need no installation."

Automatic installation of your own applications: suppose you developed the e-commerce application yourself, so nobody but you knows how to install it.
For instance, installing the e-commerce application requires configuring your Alipay or WeChat account so that when someone buys from your store, the payment lands in your account; nobody knows that account but you. So the platform cannot do the installation for you, but it can help you automate it: you do some work up front to build your configuration into an automated installation process. In the example above, the 90 machines newly created for Double Eleven are empty; if a tool can install the e-commerce application on all 90 automatically, you get real flexibility at the application level. Puppet, Chef, Ansible, and Cloud Foundry can all do this, and the more recent container technology Docker does it even better.

General-purpose applications that need no installation: "general-purpose" here means applications that are fairly complex but that everyone uses, such as databases. Almost every application uses a database, but database software is standard; installing and maintaining it is complicated, yet it is the same no matter who does it. Such applications can be turned into standard PaaS-layer applications and offered in the cloud platform's console. When a user needs a database, one click creates it and the user can use it right away.

Someone may ask: if the installation is the same for everyone, why not do it myself instead of paying the cloud platform? Of course not. A database is a very hard thing to get right; Oracle as a company makes a fortune from databases alone, and buying Oracle costs a lot of money too.

Most cloud platforms, however, offer open-source databases such as MySQL, so you do not have to spend that much. But maintaining such a database still takes a large team.
Tuning a database until it can support Double Eleven is not something you can pull off in a year or two.

Say you run a bike-sharing business. There is no need to recruit a huge database team for this; the cost is too high. Hand it to the cloud platform and let professionals do professional work. The cloud platform has hundreds of people dedicated to maintaining this system, and you only need to focus on your bike-sharing application.

Either deployment is automatic or no deployment is needed. Either way, you have less to worry about at the application layer, and that is the important role of the PaaS layer.

Scripts can solve the deployment of your own application, but environments differ enormously: a script that runs correctly in one environment often fails in another.

Containers solve this problem better.

The English word "container" also means a shipping container, and that is exactly the idea: a container is a shipping container for software delivery. A shipping container has two characteristics: it packages, and it is standardized.

Before shipping containers, suppose cargo had to travel from A to B by way of three ports, changing ships three times. Each time, the cargo was unloaded in a jumble, carried onto the next ship, and arranged neatly again. So without containers, the crew had to stay ashore for several days at every change of ship.

With containers, all the cargo is packed together, and all containers have the same dimensions, so at each change of ship the whole box is simply moved across. It takes hours, and the crew no longer has to wait ashore for days.

That is how the two characteristics, packaging and standardization, apply in everyday life.

So how does a container package an application? Again by learning from the shipping container.
First, there has to be a closed environment that seals the cargo in, so that pieces of cargo do not interfere with one another and stay isolated, which makes loading and unloading easy. Fortunately, the LXC technology in Ubuntu has long been able to do this.

The closed environment relies mainly on two technologies. One makes things look isolated and is called namespaces: the applications in each namespace see different IP addresses, user spaces, process IDs, and so on. The other makes things isolated in use and is called cgroups: the whole machine has plenty of CPU and memory, but a given application may use only part of it.

An image is the state of the container saved at the moment you weld the box shut. It is like Sun Wukong shouting "Freeze!": the container is frozen at that instant, and the state of that instant is saved as a set of files. The format of these files is standard, so anyone who gets them can restore the frozen moment. Restoring an image to a running state, that is, reading the image files and reviving that moment, is what running a container means.

With containers, the PaaS layer can deploy users' own applications automatically, quickly, and elegantly.

III. Big data embraces cloud computing

One complex, general-purpose application in the PaaS layer is the big data platform. How did big data blend into cloud computing step by step?

1 Data is small but contains wisdom

In the beginning, big data was not big. How much data was there originally? Today everyone reads e-books and follows the news online, but when those of us born in the 1980s were young, there was not much information around: we read books and newspapers. How many words are there in a week of newspapers? Outside the big cities, an ordinary school library had only a few shelves of books.
Later, as information technology arrived, there was more and more information.

First, look at the data in big data. There are three types: structured data, unstructured data, and semi-structured data.

Structured data: data with a fixed format and limited length. A filled-in form is structured data, for example nationality: People's Republic of China; ethnicity: Han; gender: male.

Unstructured data: data of variable length with no fixed format, of which there is more and more. Web pages are an example: some are very long, and some end after a few sentences. Audio and video are unstructured data too.

Semi-structured data: data in formats such as XML or HTML. Non-technical readers may not know these, but that does not matter.

Data by itself is not useful; it has to be processed. What your fitness band records as you run every day is data, and so are all the web pages on the Internet. We call this Data. Data itself is of no use, but it contains something very important called Information.

Data is messy; only after being sorted and cleaned can it be called information. Information contains many patterns, and the patterns we distill from information are called Knowledge. Knowledge changes destiny. There is plenty of information, but some people look at it and see nothing, while others see the future of e-commerce in it, or the future of live streaming, and so they succeed. If you do not extract knowledge from information, scrolling through your friends' posts every day only makes you a bystander to the Internet boom.

With knowledge in hand, some people apply it in practice and do very well; this is called wisdom (Intelligence). Having knowledge does not necessarily mean having wisdom. Many scholars, for example, are very knowledgeable.
They can analyze what has already happened from every angle, but when it comes to doing something themselves, they cannot turn the knowledge into wisdom. What makes many entrepreneurs great is that they apply the knowledge they gain to practice and end up building big businesses.

So the use of data goes through four steps: data, information, knowledge, and wisdom.

The last stage is what many businesses want. Look, I have collected all this data; can it help me make my next decision and improve my product? For example, while a user watches a video, an ad pops up beside it for exactly what they want to buy; while a user listens to music, other music they really want to hear is recommended.

Every casual mouse click and every piece of text a user types into my application or website is data to me. I want to extract something from it, use it to guide practice, and turn it into wisdom, so that users get hooked on my application, never want to leave once they arrive, and keep clicking and keep buying.

Many people say they want to cut off the Internet at home on Double Eleven, because their wife just keeps buying: she buys A, the site recommends B, and she says, "Oh, B is just what I like. Honey, I want to buy it." How is this program so clever that it knows my wife better than I do? How is that done?

2 How data is refined into wisdom

Data processing takes several steps, and only at the end is there wisdom.

The first step is data collection. There has to be data first, and there are two ways to collect it.

The first way is to pull, or in more technical terms to scrape or crawl. A search engine does exactly this: it downloads all the information on the Internet into its own data center so that you can search it. When you search, the result is a list. Why is this list held by the search engine company?
Because it has already fetched the data. Once you click a result, however, the website you land on is not at the search engine company. Say Sina has a news story and you find it with Baidu: before you click, the page you see is in Baidu's data center; after you click, the page that opens is in Sina's data center.

The second way is to push: many terminals can collect data for me. A Xiaomi band, for example, uploads your daily running, heart rate, and sleep data to the data center.

The second step is data transmission. This is generally done through a queue, because the volume of data is simply too large and data has to be processed before it is useful. The system cannot process it all at once, so the data has to line up and be handled bit by bit.

The third step is data storage. Data is money now; holding the data is holding the money. How else would a website know what you want to buy? It knows because it has your transaction history. This information cannot be given to anyone else; it is very valuable, so it has to be stored.

The fourth step is data processing and analysis. What was stored above is raw data, which is mostly messy and full of junk, so it has to be cleaned and filtered to yield high-quality data. High-quality data can then be analyzed, either to classify it or to discover relationships within it, and that yields knowledge.

Take the oft-repeated story of beer and diapers at Walmart: by analyzing purchase data, it was found that men buying diapers tend to buy beer at the same time. That revealed a relationship between beer and diapers, which is knowledge; applied in practice by placing the beer and diaper shelves close together, it became wisdom.

The fifth step is data retrieval and mining. Retrieval means search; as the saying goes, "when in doubt about foreign matters ask Google, and about domestic matters ask Baidu."
Both of these search engines, the one for outside China and the one for inside, put the analyzed data into a search index, so that when people look for information a single search finds it.

The other part is mining. Merely finding results no longer satisfies people; relationships also have to be dug out of the information. In financial search, for example, when someone searches for a company's stock, shouldn't the company's executives be surfaced as well? Suppose you search only for the stock, see that it is rising nicely, and buy it, when in fact an executive has just made a statement that is very bad for the stock, and it falls the next day. Wouldn't that hurt a great many investors? So using various algorithms to mine the relationships in data and build a knowledge base is very important.

3 In the big data era, many hands make the work light

When the volume of data is small, a few machines are enough. As the volume grows and even the most powerful single server cannot cope, what then? It takes the combined strength of many machines, all working together to get the job done: when everyone gathers firewood, the flames rise high.

For data collection: in the Internet of Things, thousands of sensing devices are deployed in the field and collect large amounts of temperature, humidity, monitoring, power, and other data. For a web search engine, every page on the Internet has to be downloaded. One machine obviously cannot do that; it takes many machines forming a web crawler system, each downloading a part and all working at once, to download a vast number of pages in limited time.
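
The crawler split described above, where each machine downloads its own share of the pages, could be sketched as follows. This is only an illustration, not how any particular search engine works: the function names `assign_machine` and `partition`, the example URLs, and the choice of a hash to divide the work are all assumptions made for this sketch. No pages are actually downloaded; the code only decides which machine would be responsible for which URL.

```python
import hashlib


def assign_machine(url: str, machine_count: int) -> int:
    """Map a URL to one crawler machine using a stable hash of the URL."""
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % machine_count


def partition(urls, machine_count):
    """Split the URL list into one work list per machine."""
    work = [[] for _ in range(machine_count)]
    for url in urls:
        work[assign_machine(url, machine_count)].append(url)
    return work


# Made-up URLs, used only to show the split.
urls = [f"https://example.com/page/{i}" for i in range(12)]
for machine, batch in enumerate(partition(urls, machine_count=3)):
    print(f"machine {machine} downloads {len(batch)} pages")
```

Because the hash of a URL never changes, the same page always goes to the same machine, so no page is downloaded twice and no central list of "who has what" is needed.
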
For the transmission of data: an in-memory queue would be overwhelmed by such a volume of data, so disk-based distributed queues were created. The queue can then be carried by many machines at once. However large your data volume, as long as there are enough queues and the pipes are thick enough, it can hold up.

For the storage of data: one machine's file system certainly cannot hold it all, so a large distributed file system is needed that combines the hard disks of many machines into one big file system.

For the analysis of data: a large amount of data may need to be split up, counted, and summarized, which one machine cannot handle. So distributed computing was invented: it divides the data into small pieces, each machine processes one piece, and many machines work in parallel, so the computation finishes quickly. For example, the well-known TeraSort benchmark sorts 1 TB of data, roughly 1000 GB. Processed on a single machine it would take several hours, but processed in parallel it finished in 209 seconds.

So what is big data? Put bluntly, it is work that one machine cannot finish and many machines do together. But as data volumes keep growing, many small companies also need to process quite a lot of data. What can they do without that many machines?

4 Big data needs cloud computing, and cloud computing needs big data

At this point you will have thought of cloud computing. Doing these things takes many machines, and you really do want them whenever you need them and in whatever quantity you need. For example, a company's finances might be analyzed with big data tools once a week. Keeping a hundred or a thousand machines sitting there to be used once a week would be very wasteful. Could the thousand machines be brought out only when the computation runs, and do other work the rest of the time? Who can do that?
Only cloud computing can: it gives big data workloads elasticity at the resource layer. Cloud computing, in turn, deploys big data onto its PaaS platform as a very important general-purpose application. A big data platform makes many machines work on one task together, which is not something an ordinary team can develop, or even operate. Running it well takes dozens or even hundreds of people. So, just as with databases, it takes a group of specialists to run this. Nowadays public clouds generally offer big data solutions. When a small company needs a big data platform, it does not need to buy a thousand machines. It just requests them on the public cloud, the thousand machines appear with the big data platform already deployed on them, and the company only has to put its data in and compute.

Cloud computing needs big data, big data needs cloud computing, and so the two came together.

Fourth, artificial intelligence embraces big data

1 When can machines understand the human heart?
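Even with big data, human desires are not satisfied. A big data platform does have search engines, so whatever you want is one search away. But there are also cases like this: I do not know how to search for what I want, I cannot put it into words, and what the search returns is not what I wanted.

For example, a music app recommends a song I have never heard. Naturally I do not know its name, so I could not have searched for it. Yet the app recommended it and I really do like it. That is something search cannot do. When people use apps like this, they find that the machine knows what they want, rather than waiting for them to go and search when they want something. The machine understands me like a friend does, and that starts to look like artificial intelligence.

People have been thinking about this for a long time. Early on, they imagined a wall with a machine behind it: I speak to it and it responds. If I cannot tell whether a person or a machine is on the other side, then it really is artificially intelligent.

2 Teaching machines to reason

How can this be achieved? People thought: first, give the computer the human ability to reason. What matters most about humans? What sets humans apart from animals? The ability to reason. If that ability could be given to machines, so that a machine could reason out an answer to your question, how good would that be?

In fact, people have gradually made machines able to do some reasoning, such as proving mathematical formulas. It was a delightful surprise that a machine could prove mathematical formulas. But the result gradually turned out to be less surprising, because people noticed something: mathematical formulas are very rigorous, the reasoning process is very rigorous, and formulas are easy to express to a machine and relatively easy to express in a program.

Human language is not so simple. Say you have a date with your girlfriend tonight, and she says: "If you arrive early and I am not there, you wait. If I arrive early and you are not there, just you wait!" That is hard for a machine to understand, but every person gets it. So you do not dare to be late for a date with your girlfriend.

3 Teaching machines knowledge

So telling machines strict reasoning alone is not enough; they also have to be given knowledge. But giving machines knowledge is something most people cannot do. Perhaps experts can, such as experts in linguistics or in finance.

Can knowledge about language and finance be expressed somewhat rigorously, like mathematical formulas? For example, linguists might summarize grammar rules about subject, predicate, object, attributive, adverbial, and complement: the subject must be followed by the predicate, and the predicate must be followed by the object. Wouldn't it be enough to summarize these rules and express them rigorously?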
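A rigid rule of this kind might be sketched as follows. This is only an illustration: the role tags `S`, `V`, `O`, the function name `follows_strict_rule`, and the sample sentences are all made up here and are not taken from any real grammar toolkit.

```python
# Hypothetical role tags: "S" subject, "V" predicate, "O" object.
STRICT_ORDER = ["S", "V", "O"]


def follows_strict_rule(tagged_words):
    """Accept a sentence only if its roles are exactly subject, predicate, object."""
    roles = [role for _, role in tagged_words]
    return roles == STRICT_ORDER


if __name__ == "__main__":
    full = [("she", "S"), ("reads", "V"), ("books", "O")]
    terse = [("she", "S"), ("books", "O")]  # predicate left out
    print(follows_strict_rule(full))
    print(follows_strict_rule(terse))
```

The rule accepts the fully formed sentence and rejects the one that leaves out the predicate, which gives a feel for how brittle a hand-written rule can be.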
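It later turned out that this does not work. The rules are too hard to summarize, because language is endlessly varied. Take the subject-predicate-object example: spoken language often drops the predicate. Someone asks 你谁啊？ (literally "You who?") and I answer 我刘超 (literally "I Liu Chao"). Yet you cannot require people to speak standard written language to the machine during speech and semantic recognition; that would still not be intelligent enough. As Luo Yonghao said in a talk, having to address your phone in formal written language every time, "Please call so-and-so for me," is very embarrassing.

This stage of artificial intelligence is called expert systems. Expert systems rarely succeeded. On the one hand, knowledge is hard to summarize; on the other, the summarized knowledge is hard to teach to a computer. If you yourself are still hazy, sensing that there is a pattern but unable to state it, how can you teach it to a computer through programming?

4 Forget it: if you cannot be taught, learn by yourself

So people thought: machines are a completely different species from humans, so why not let machines learn by themselves?

How does a machine learn? Since machines are so good at statistics, statistical learning should be able to find patterns in large amounts of numbers.

The entertainment world offers a good example that gives a glimpse of the idea.

A netizen tallied the lyrics of 117 songs from 9 albums released in mainland China by a well-known singer. A word was counted only once per song. The top ten adjectives, nouns, and verbs are shown in the table below (the number after each word is its number of occurrences):

If we write down an arbitrary string of digits and use each digit in turn to pick a word from the adjectives, nouns, and verbs, what do we get when we string them together?

For example, take pi, 3.1415926. The corresponding words are: strong (坚强), road (路), fly (飞), free (自由), rain (雨), bury (埋), lost (迷惘). With a little connecting and polishing:

The strong child,
still walking on the road,
spreads his wings and flies toward freedom,
letting the rain bury his confusion.

Starting to feel like something? Of course, real statistical learning algorithms are far more complex than this simple tally.

However, statistical learning captures simple correlations fairly easily (for example, if one word always appears with another, the two are probably related) but cannot express complex correlations. Moreover, the formulas of statistical methods are often very complicated, and to simplify the computation various independence assumptions are commonly made. In real life, truly independent events are comparatively rare.

5 Simulating how the brain works

So humans began to look back from the world of machines and reflect on how the human world works.

The human brain does not store a large number of rules, nor does it record a large amount of statistics. It works through the firing of neurons. Each neuron receives input from other neurons, and when it receives input it produces an output that stimulates other neurons. Large numbers of neurons react to one another and eventually produce all kinds of outputs.

For example, when people see a beautiful woman their pupils dilate. This is certainly not the brain applying rules about body proportions, nor tallying up every beautiful woman seen in a lifetime. Instead, neurons fire from the retina to the brain and back to the pupil. In this process it is actually hard to say what role each neuron played in the final result; they simply did play a role.

So people began to simulate a neuron with a mathematical unit.

This neuron has inputs and an output, related by a formula, and each input influences the output according to its importance (its weight).

Then n such neurons are connected together into a neural network. The number n can be very large. The neurons can be arranged in many columns, with many neurons in each column. Each neuron can weight its inputs differently, so each neuron's formula is different. When people feed something into this network, they hope it outputs a result that is correct from a human point of view.

In the example above, an image of a handwritten 2 is fed in, and the second number in the output list is the largest. The machine knows neither that the image shows a 2 nor what the series of output numbers means. That does not matter; it is enough that humans know the meaning. Likewise, neurons know neither that the retina is seeing a beautiful woman nor that the pupils dilate in order to see more clearly. A beautiful woman is seen, the pupils dilate, and that is enough.

For any neural network, no one can guarantee that when the input is 2 the second output number will be the largest. Guaranteeing that requires training and learning. After all, pupils dilating at the sight of a beauty is itself the result of many years of human evolution. Learning works like this: feed in a large number of images, and whenever the result is not the desired one, make an adjustment.

How is it adjusted? Every weight of every neuron is nudged slightly toward the target. Because there are so many neurons and weights, the network as a whole rarely flips from wrong to right in one step. Instead it inches toward the target and eventually reaches it.

Of course, these adjustment strategies take considerable skill and need algorithm experts to tune them carefully. It is like a man who sees a beautiful woman but whose pupils at first do not dilate enough to see clearly, so she leaves with someone else. What he learns for next time is to dilate his pupils a little more, not his nostrils.

6 It does not sound reasonable, but it works

It does not sound all that reasonable, but it really can be done. It is just that willful!

The universality theorem for neural networks says this: suppose someone hands you some complicated, peculiar function f(x). No matter what the function is, there is guaranteed to be a neural network such that for every possible input x, the value f(x) (or some close approximation of it) is the output of the network. (More precisely, the theorem covers continuous functions over a bounded range of inputs and guarantees an approximation to any desired accuracy.)

If the function represents a pattern, this means that the pattern, however marvelous or incomprehensible, can be represented by a large number of neurons through the adjustment of a large number of weights.

7 An economic explanation of artificial intelligence

This reminded me of economics, which makes it easier to understand.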
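Before following the analogy, it may help to see the neuron from section 5 as a few lines of code. The sketch below is an illustration under stated assumptions, not the author's method: it uses a sigmoid as the formula between inputs and output, and a plain gradient step on squared error as the "small adjustment toward the target". The names `neuron_output` and `nudge_weights` and all the numbers are hypothetical.

```python
import math


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into (0, 1) by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def nudge_weights(inputs, weights, bias, target, rate=0.5):
    """One small gradient step that reduces the squared error to the target."""
    out = neuron_output(inputs, weights, bias)
    # Gradient of 0.5 * (out - target)^2 with respect to the weighted sum,
    # using the sigmoid derivative out * (1 - out).
    delta = (out - target) * out * (1.0 - out)
    new_weights = [w - rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - rate * delta
    return new_weights, new_bias


if __name__ == "__main__":
    inputs, target = [1.0, 0.5], 1.0  # illustrative values only
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(200):
        weights, bias = nudge_weights(inputs, weights, bias, target)
    print(neuron_output(inputs, weights, bias))
```

Each call moves the weights only a little, so the output creeps toward the target over many repetitions instead of jumping there, which is the behavior described for the whole network.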
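Think of each neuron as an individual engaged in economic activity in society. The neural network then corresponds to the whole economy. Each neuron adjusts its weights in response to inputs from society and produces a corresponding output: wages rose, vegetable prices rose, stocks fell, so what should I do and how should I spend my money? Is there no pattern here? There certainly is, but exactly what pattern? That is hard to say.

An economy based on expert systems is a planned economy. The laws of the economy are not expected to emerge from the independent decisions of each economic individual but to be summarized through the lofty vantage point and foresight of experts. But experts can never know which street in which city lacks a vendor selling sweet tofu pudding.

So when experts say how much steel and how many steamed buns should be produced, the figures are often far from what people's lives really require. Even a plan several hundred pages long cannot express the small patterns hidden in everyday life.

Macro-level regulation based on statistics is much more reliable. Every year the statistics bureau measures indicators such as the employment rate, the inflation rate, and GDP for the whole society. These indicators often reflect many underlying patterns. They cannot express them precisely, but they are relatively reliable.

However, patterns summarized from statistics are relatively coarse. Looking at these statistics, economists can conclude whether house prices or stocks will rise or fall in the long run. For example, if the economy as a whole is rising, both should rise. But statistics cannot reveal the patterns in the small fluctuations of stocks and prices.

Microeconomics based on neural networks is the most accurate expression of the laws of the whole economy. Each person adjusts to the inputs they receive from society, and those adjustments are fed back into society as inputs. Picture the fine fluctuations of a stock market chart: they are the result of each independent individual trading continuously, with no single unified pattern to follow.

And as each person makes independent decisions based on inputs from the whole of society, certain factors, after many rounds of training, also produce statistical patterns at the macro level. This is what macroeconomics can see. For example, every time a large amount of money is issued, house prices eventually rise; after many rounds of training, people have learned this too.

8 Artificial intelligence needs big data

However, a neural network contains so many nodes, and each node contains so many parameters, that the total number of parameters is huge and the computation required is huge. But that is fine: we have big data platforms, which can pool the power of many machines to compute together and deliver the desired result in a limited time.

Artificial intelligence can do a great many things, such as identifying spam email and identifying pornographic or violent text and images. This, too, went through three stages.

The first stage relied on keyword blacklists, whitelists, and filtering: text containing certain words was judged pornographic or violent. As Internet slang multiplied and words kept changing, keeping the word list up to date became hard to manage.

The second stage was based on newer algorithms such as Bayesian filtering. You do not need to know what the Bayesian algorithm is, but you have probably heard the name; it is a probability-based algorithm.

The third stage is based on big data and artificial intelligence, with more precise user profiling, text understanding, and image understanding.

Most artificial intelligence algorithms depend on large amounts of data, and that data usually has to be accumulated over a long time in a specific domain (such as e-commerce or email). Without data, an AI algorithm is useless. So AI programs are rarely delivered like the IaaS and PaaS described earlier, by installing a copy for a customer to use. If a copy is installed for a single customer who has no relevant data for training, the results are usually poor.

Cloud providers, however, have usually accumulated large amounts of data. So the program is installed at the cloud provider and exposed through a service interface. If you want to check whether a piece of text involves pornography or violence, you just call the online service. In cloud computing, this form of service is called Software as a Service (SaaS).

And so artificial intelligence programs entered cloud computing as SaaS.

Fifth, a good life built on the relationship among the three

At last the three brothers of cloud computing are all here: IaaS, PaaS, and SaaS. So on a typical cloud computing platform you can find cloud, big data, and artificial intelligence. A big data company that has accumulated a large amount of data will use AI algorithms to provide services, and an AI company cannot do without a big data platform to support it.

So when cloud computing, big data, and artificial intelligence are integrated in this way, they complete the journey of meeting, getting acquainted, and coming to know one another.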