Deep learning can be translated from one to many images

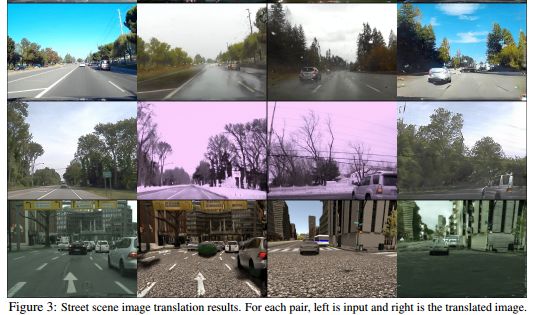

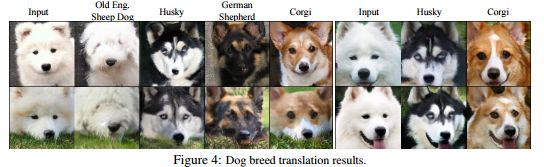

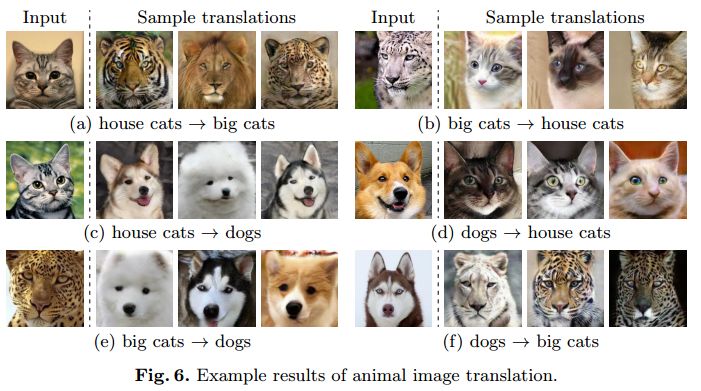

We all know that chameleons can change the color texture of the skin, and today deep learning technology can even transform the image of a cat into an image of a dog, even a lion and a tiger. This algorithm, which can convert a picture into a variety of different targets, not only provides rich material for the production of movies and game scenes, but also can quickly and easily generate rich training data under different road conditions for automatic driving, in order to continuously improve the surface. Ability to different road conditions. One-to-many image translation Earlier researchers discovered that unsupervised methods could be used for image translation to convert one image and video to another. It learns joint probability distributions in different domains by using images from the edge distribution in the independent domain. Researchers have proposed an unsupervised translation framework for images by establishing shared implicit space assumptions and using dual GANs to achieve efficient image translation. In the experiment, the process of image translation of street scenes, animal images, and human faces was performed. With the deepening of research, researchers have developed a new network structure. This multi-modal network structure will be able to convert a picture to multiple different image outputs at the same time. Similar to earlier image translation studies, multimodal graphics translation uses two kinds of deep learning techniques: unsupervised learning and generating confrontation networks (GANs). Its purpose is to give the machine a stronger imagination and allow the machine to be sunny. The street photos turn into different scenes of storms or winter days. This kind of technology has greatly promoted driverlessness. Unlike previous techniques, researchers can not only get an instance of a winter through conversion, but can also get a series of different winter snowfall scenes at the same time. This means that a single piece of data can produce a rich set of data sets covering a wider range of situations. In order to achieve this task of unsupervised image translation, the researchers proposed the Multimodal Unsupervised Image-to-Image Translation (MUNIT), which first assumes that the representation of the image can be decomposed into a domain-independent Encoding, and domain-related style coding can be collected at the same time. In order to achieve the image translation between different domains, the author combines the content encoding with the style coding sampled in the target domain to achieve the output of multiple target samples. This multi-modal unsupervised image translation technique separates the image content and style. For example, for a cat in an image, its pose is the content of the image and the cat type is the style of the image. In the actual translation process, the posture is fixed, and the style differs depending on the target. It can be a dog or a leopard. The posture of the animal remains unchanged, and its style can cover different species from Corgi to Jaguar. The same technique can also be used to generate scene images at different times of day, scenes under different weather conditions or under light conditions. Such a technique can be of great benefit to deep neural networks that require extensive data training. In addition to autopilot and deep learning, multimodal image translation technology can provide game companies with a powerful tool for rapidly creating new roles and a new world. The same artists can also create complex or rich scenes to the machine to deal with, and put more energy into the core of the creation. No data? no problem! This research is mainly based on GANs, a deep learning method that is good at generating visual data. A typical GANs contains two competing neural networks: one for generating an image and the other for judging whether the resulting image looks true or false. GANs show more powerful capabilities when data is scarce. General image translation requires two interrelated data sets: If you need to convert a cat to a dog or other animal, you need to collect photos of the same cat and dog. This kind of data is scarce and sometimes it is impossible to collect it. However, the MUNIT method proposed in this paper breaks through this limitation and greatly increases the use of image translation. It does not need to use one-to-one data to achieve multi-modal transformation. MUNIT can also generate a large amount of training data for autopilot without having to capture the same view point record, and can generate data of various traffic conditions and details at the exact same location of the same point of view. In addition, GANs also eliminate the need for lengthy manual annotation of images or videos, saving a lot of time and money. The authors of the paper expressed the desire to give the machine the same imagination as humans. Just as human beings look at the scenery, regardless of the flowers before the court, we can always imagine the changes of seasons in spring, summer, autumn and winter. When you look at the scenery, Zhaohui Xiyin, Meteorology, and the four seasons cycle all stand on your chest.

We are dedicated charging solution Manufacturer since 2005.

Supply various Power Station including Portable Power Stations, Solar Power Generators, Smallest Generator etc.

Manufacturing high quality products for customers according to international standards, such as CE ROHS FCC REACH UL SGS BQB etc.

To constantly offer clients more innovative products and better services is our consistent pursuit.

portable power stations for camping, solar pow er stations, jackery portable power station TOPNOTCH INTERNATIONAL GROUP LIMITED , https://www.micbluetooth.com